Risk Management

(Integrated with Independent Audit of AI, algorithmic and autonomous systems)

ForHumanity’s purpose is to mitigate downside risks posed by AI, algorithmic and autonomous systems to humans. Organizations minimize their own risk exposure from socio-technical systems when they maximize risk mitigation for humans, society and the environment. One clear way to mitigate risks is to implement and operationalize a robust and agile risk management framework. Our risk management framework is foundational to Independent Audit of AI Systems.

In the ForHumanity (FH) context, risk management enables compliance with audit criteria and provides a sustainable method to prevent, detect and respond to emergent risks. FH audit criteria, respecting requirements in the new EU AI Regulation, cover all key risk and control domains, either with specific criteria or in supporting guidance for auditors. They can be supplemented, as appropriate, to account for local context and an organization’s location in the AI supply chain, recognising that an increasingly large proportion of AI implementation will involve incorporating one or more trained models into pre-existing or newly developed products and processes.

ISO 31000 provides a strong foundation for the corporate risk management process. Risk management in the FH context includes the identification, evaluation and prioritization of risks, followed by a coordinated approach to minimize the adverse impacts of those risks on individuals, society and the environment. Using that lens, we build on the ISO 31000 framework for AI risk management with three key focus areas: risks viewed from the perspective of human impact; risk inputs gathered through Diverse Inputs and Multistakeholder Feedback; and risks compiled from adverse incidents or post-market monitoring.

The following are the key takeaways from the risk management framework:

- Guidance on risk assessment and templates to compile risks, associated controls and treatment plans across the lifecycle of AI, algorithmic and autonomous systems (hereafter AAA Systems).
- Guidance on Diverse Inputs & Multistakeholder Feedback (DI&MSF), including approaches to consider in gathering such inputs.
- Guidance on the process to establish the Risk Tolerance and Risk Appetite for each AAA System.

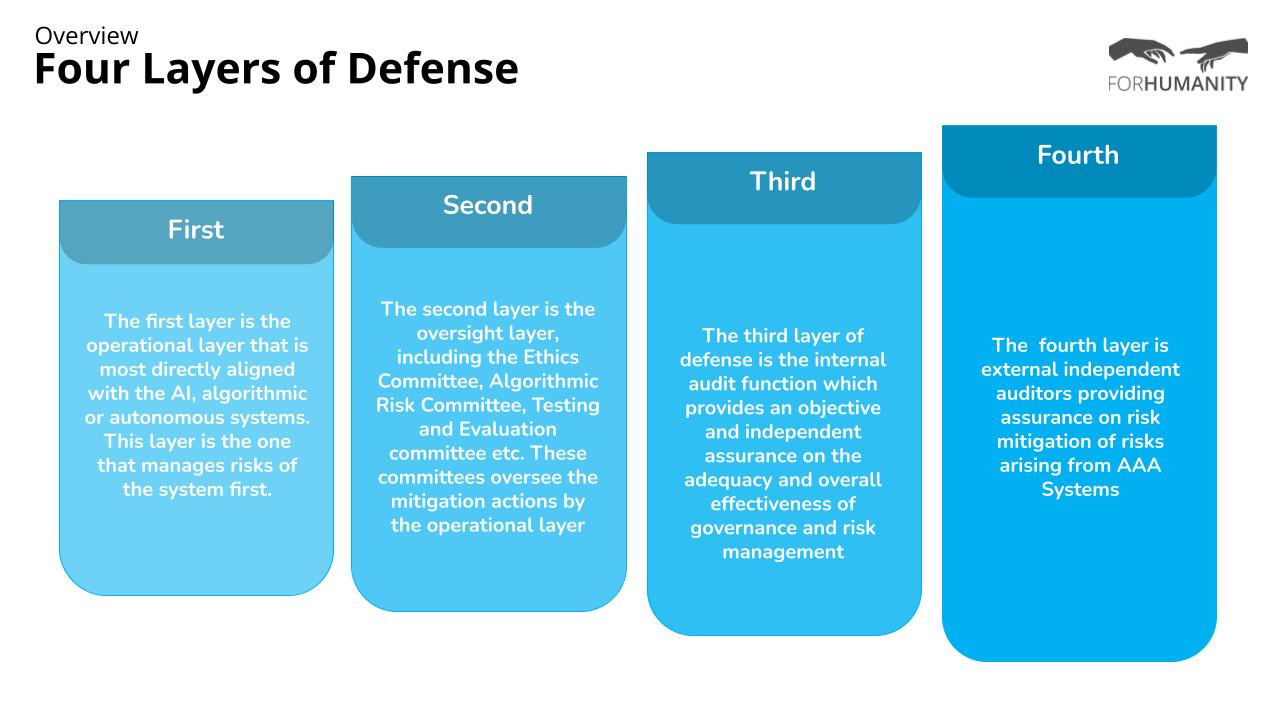

- Guidance regarding the responsibilities of the specific committees (e.g. Algorithmic Risk Committee) and their interrelationships across the lifecycle in the context of risk management for AAA Systems.
- A concept note that explains how, in the context of AAA Systems, maximizing risk mitigation reduces the risk impact to humans, thereby limiting the risk exposure of the organization.
- Guidance on managing residual risks and the associated disclosure to users/stakeholders.